AI Machines

16 April 2021

Translated: 24 November 2024

Manfred Goschler

Summary

Written in 2021 and reflecting my thoughts at the time, this article addresses the growing influence of AI and discusses its applications, ethical considerations and societal impact. Various lectures gave me the impression that AI systems are opaque and carry potential risks, especially when their decisions lack transparency or accountability. On the other hand, examples from industrial applications, such as predictive maintenance, illustrate the transformative potential of AI.

The article also discusses ethical concerns about integrating AI into society, focusing on the importance of clear definitions and human responsibility. I suggested using the term “machines” or “AI machines” more often for AI applications, to demystify their role and emphasize human control.

Whether this view still holds today (2024) remains an open question.

AI Machines – An Excursion into Artificial Intelligence

Introduction to AI in Agriculture

About two years ago, as someone unfamiliar with current AI technology, I attended a lecture on AI applications in agriculture. The presentation showcased impressive results achieved through neural networks trained on large datasets, such as image recognition. What struck me most wasn’t just the outcomes but the fact that users of such AI programs often cannot explain how these results are generated.

Imagine an AI-powered program assessing a person’s creditworthiness and arriving at a negative conclusion because of erroneous reasoning within the system. If such a decision cannot be explained, this raises significant ethical and practical concerns. Humans, too, sometimes make decisions they cannot explain, but we can generally turn to the legal system for recourse. If AI systems lack adequate means of explaining their results, we risk becoming overly dependent on these opaque, self-learning systems.

Complexity and Ethical Implications

AI systems operate through complex interactions between algorithms and data. This complexity often makes their processes hard to explain. If outcomes are based merely on statistical probabilities, is that sufficient? Or are we so impressed by these advancements that we neglect to question them?

Various sources highlight that the widespread adoption of AI will bring transformative changes to society, raising questions about values and norms. Everyday applications like image recognition, speech recognition, autonomous driving, and robotics underscore this shift. Initiatives like the Ethics Guidelines for Trustworthy AI (2019), drawn up by the European Commission’s High-Level Expert Group on AI, and the Commission’s White Paper on Artificial Intelligence (2020) further underline the importance of ethical considerations in AI.

Applications in Industry

During the digital edition of Hannover Messe 2021, AI played a prominent role. Predictive maintenance, for instance, was presented as a key application: by analyzing sensor data with AI, companies can predict component wear and act early to minimize machine downtime. This goes beyond fault detection into integrated logistics processes that automate decisions about part replacement, procurement, and repair. Such processes are likely to become commonplace, given the low technical hurdles and significant economic benefits.

The German Research Center for Artificial Intelligence (DFKI) was also present at the fair, presenting itself as one of Germany’s leading organizations for AI software innovation. On the final day, a presentation about the CLAIRE Innovation Network emphasized AI’s societal relevance.

Ethics and Ambiguity

Through further research, I came across a concise overview of AI ethics by Gert Scobel, a German journalist, television presenter, author and philosopher. Scobel described ethics as a “secular discipline,” much like computer science, that addresses key questions such as:

- What is “good”?

- What should we “do”?

- How should normative claims be examined?

These questions become especially pertinent in the context of digitalization and AI. Numerous publications call for ethical, safe, fair, and socially accepted AI systems. However, terms like “artificial intelligence,” “information,” and “digitalization” often lack clear definitions, which leads to misinterpretations and overgeneralized conclusions.

For instance, if AI is treated as a computer-science technology and fairness is nevertheless demanded of it, this may indicate a potential loss of control over these applications. Ethical concerns might stem more from technical shortcomings, such as insufficient data protection or a lack of transparency, than from AI itself.

Reframing AI as Machines

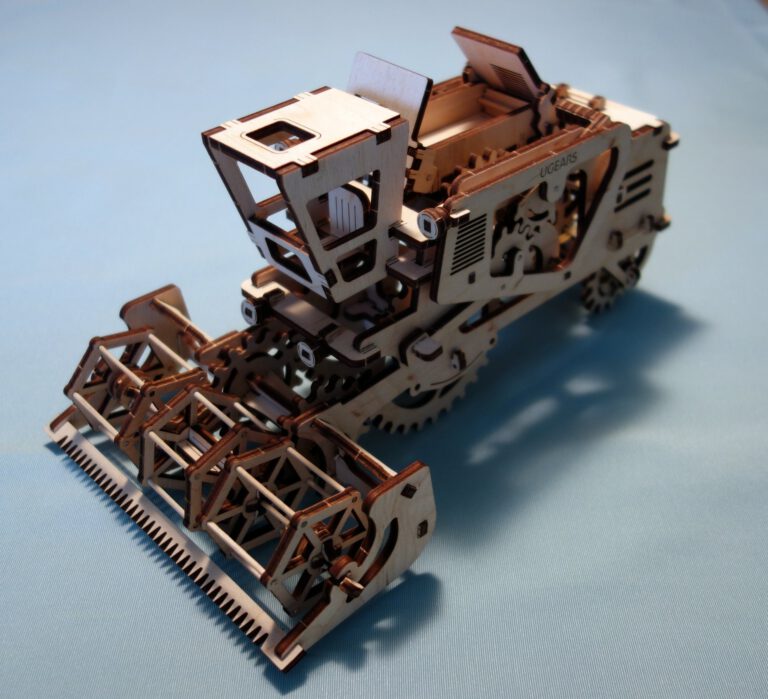

Given this ambiguity, I propose referring to AI applications as “machines.” The term “machine” is well-established in the physical world and increasingly relevant in the virtual domain. Using this term may help clarify distinctions between humans and artificial systems, while avoiding the baggage of overloaded or vague terms like AI.

Machines have long been part of our lives, governed by practical experience, guidelines, and regulations. Framing AI applications as machines allows us to treat them as objects rather than subjects, reinforcing the notion that humans, not AI, make decisions and bear responsibility.

Looking Ahead

As a non-expert, I would like to learn more about our digital future, for example how the European Commission envisions its AI strategy.